When game engines are used for VR, they have to include many new capabilities: stereo rendering, higher frame rates, distortion correction, latency control and more. But one topic that is often overlooked is that VR game engines also have to deal with a wide variety of VR peripherals, each with its own API.

A non-VR engine primarily interfaces with a game controller, keyboard and mouse. These are well-understood peripherals with standard software interfaces. New types of keyboards, controllers and mice do appear on the market, but they share essentially identical interfaces to pre-existing devices.

In contrast, there is a much greater variety of VR devices: HMDs, motion and position trackers, hand and finger sensors, eye trackers, body suits, locomotion devices, force feedback devices, augmented reality cameras and more. Furthermore, devices within the same class do not share a common interface: working with a Leap Motion sensor is different from working with a Softkinetic one or an Intel Realsense one, even though the capabilities that they provide are similar.

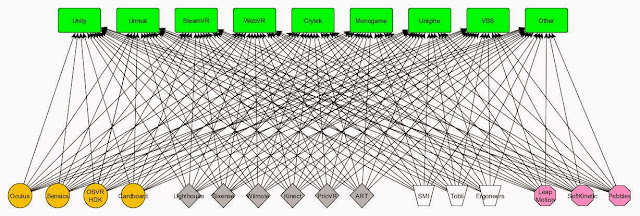

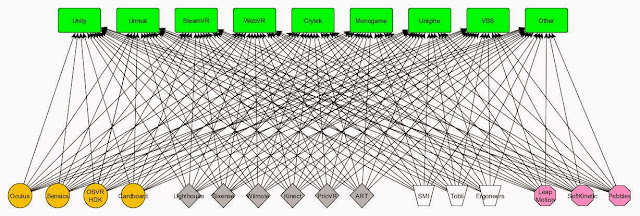

The result? An endless effort to keep up. Consider the diagram below, showing just a small selection of available VR engines and a small subset of VR devices.

VR devices and VR engines face a constant struggle to support each other

Consider a Sixense STEM motion controller. The Sixense team would probably like to make the STEM available to the widest possible range of software engines so that a developer can use STEM regardless of their engine of choice. The same goes for every other peripheral. Conversely, an Unreal Engine developer wants to support the maximum reasonable number of devices so that the game can reach the maximum number of users. Every permutation of VR device and game engine may have value. The device vendor needs to build, test, optimize and deploy plugins for various engines. The engine vendor faces constant pressure - from both game developers and hardware makers - to offer device support.

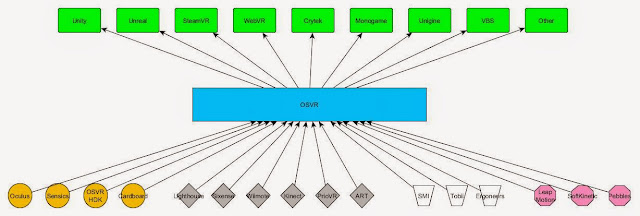

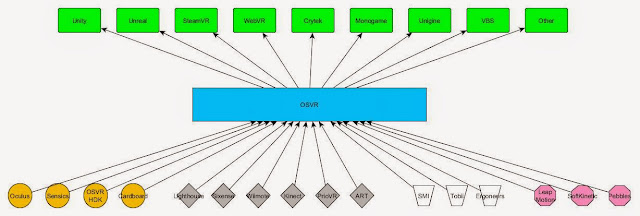

That's why every VR game engine needs a middleware abstraction layer like the OSVR SDK. OSVR factors devices into common interfaces - tracker, skeleton, imager, etc. - and then provides a standard device-independent interface to the game engine. Just like desktop scanners offer a TWAIN interface that applications can use regardless of the scanner vendor, OSVR offers optimized skeleton, eye tracker and other VR interfaces that work regardless of the underlying hardware.
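To make the idea of a device-independent interface concrete, here is a minimal sketch in Python. It is not the OSVR API: `Pose`, `Tracker`, `VendorATracker`, `VendorBTracker` and `engine_update` are hypothetical names invented for illustration, and the two "vendor" data formats are made up. The point is only that the engine-side function depends on the common interface and never on a vendor SDK.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    """Position in meters; a real interface would also carry orientation."""
    x: float
    y: float
    z: float


class Tracker(ABC):
    """Hypothetical device-independent tracker interface (not OSVR's)."""

    @abstractmethod
    def read_pose(self) -> Pose:
        ...


class VendorATracker(Tracker):
    """Adapter for an imaginary SDK that reports millimeters as a tuple."""

    def __init__(self, raw_mm):
        self._raw_mm = raw_mm

    def read_pose(self) -> Pose:
        x, y, z = self._raw_mm
        return Pose(x / 1000.0, y / 1000.0, z / 1000.0)


class VendorBTracker(Tracker):
    """Adapter for an imaginary SDK that reports meters in a dict."""

    def __init__(self, raw):
        self._raw = raw

    def read_pose(self) -> Pose:
        return Pose(self._raw["px"], self._raw["py"], self._raw["pz"])


def engine_update(tracker: Tracker) -> str:
    """Engine-side code: it only knows about the Tracker interface."""
    pose = tracker.read_pose()
    return f"hand at ({pose.x:.2f}, {pose.y:.2f}, {pose.z:.2f})"


if __name__ == "__main__":
    devices = (
        VendorATracker((100, 200, 300)),
        VendorBTracker({"px": 0.1, "py": 0.2, "pz": 0.3}),
    )
    for device in devices:
        print(engine_update(device))
```

Both devices produce the same engine-side result even though their raw formats differ, which is the property the abstraction layer is meant to provide.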

|

VR Middleware like OSVR dramatically simplifies the device and engine support effort

Consider the advantages. With OSVR:

- A device vendor writes a single interface to OSVR and immediately gains access to high-performance plugins for a wide range of game engines. In the particular case of OSVR, these plugins are free and open-source.

- Instead of attempting to work with hundreds of vendors and devices, an engine company can work with the OSVR team to create an optimized interface for each particular type of device. As new devices of the same type enter the market, supporting them is as easy as writing an OSVR plugin. Engine companies do not need to go through the effort of teaching yet another hardware vendor how to work well with their engine. The effort can be focused on optimizing the OSVR driver.

- Game developers are spared the effort of selecting particular vendors. By using the OSVR plugin for their favorite game engine, they get support for a wide range of devices out of the box.

- As new devices enter the market, games do not need to be recompiled and redistributed. In fact, the game might not even know that it is now supporting a new hardware vendor.

- OSVR interfaces are defined collectively by industry leaders that understand the power of the abstraction layer. This is done in a transparent and open-source way.

- The number of engine and device combinations increases, while the number of software interfaces to be written, debugged and optimized significantly decreases.
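The last point is simple arithmetic, sketched below. The function names and the counts of 5 engines and 20 devices are illustrative assumptions, not figures from any survey. The model also assumes one plugin per engine-device pair without middleware, and one plugin per engine plus one per device with it.

```python
def direct_integrations(engines: int, devices: int) -> int:
    """Every engine needs its own plugin for every device."""
    return engines * devices


def middleware_integrations(engines: int, devices: int) -> int:
    """Each engine and each device integrates once with the middleware."""
    return engines + devices


if __name__ == "__main__":
    engines, devices = 5, 20  # illustrative numbers only
    print(direct_integrations(engines, devices))      # 100
    print(middleware_integrations(engines, devices))  # 25
```

Under these assumptions, adding one more device costs one new integration with middleware, versus one per engine without it, while the number of supported combinations still grows by the number of engines.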

Game engines could still use vendor-specific function calls to activate some special functions of a peripheral, but the need to do so quickly decreases.

Yet another advantage is the ability to add in-line analysis plugins to the data path. For instance, it is often desirable to convert hand and finger movements into higher-level gestures. The gesture engine could be vendor-specific, but that would limit its adoption in games. It could be game-engine specific, but that would limit market adoption of gestures as a user interface method. Or, it could be an OSVR plugin that works on top of all existing hand and finger sensors (using their standardized interface) and provides a standard gesture interface to many game engines. Furthermore, this gesture engine can be written by a company or a group with expertise in gestures, instead of forcing a hardware vendor or a game engine maker to master yet another technology.
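As a rough illustration of such an in-line analysis stage, the sketch below turns standardized hand samples into pinch events. Everything here is an assumption made for the example: `HandFrame`, `PinchDetector`, the event names and the 2 cm threshold are hypothetical and are not part of OSVR. A real gesture plugin would consume whatever skeleton interface the middleware defines.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class HandFrame:
    """Hypothetical standardized hand sample; fingertip positions in meters."""
    thumb_tip: Point
    index_tip: Point


class PinchDetector:
    """Analysis stage that emits 'pinch_start' / 'pinch_end' on transitions."""

    def __init__(self, threshold_m: float = 0.02):
        self.threshold_m = threshold_m  # assumed pinch distance, 2 cm
        self._pinching = False

    def process(self, frame: HandFrame) -> Optional[str]:
        distance = math.dist(frame.thumb_tip, frame.index_tip)
        pinching = distance < self.threshold_m
        event = None
        if pinching and not self._pinching:
            event = "pinch_start"
        elif not pinching and self._pinching:
            event = "pinch_end"
        self._pinching = pinching
        return event


if __name__ == "__main__":
    detector = PinchDetector()
    apart = HandFrame((0.0, 0.0, 0.0), (0.10, 0.0, 0.0))
    together = HandFrame((0.0, 0.0, 0.0), (0.01, 0.0, 0.0))
    for frame in (apart, together, together, apart):
        print(detector.process(frame))
```

Because the detector only sees `HandFrame` values, it works with any sensor that can be adapted to that shape, and any engine can subscribe to its events without knowing which sensor produced the data.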

Whether you make engines, hardware or smart analysis software, using VR middleware such as the OSVR API deserves serious consideration.
